The Scheduling System That Saved Millions

Tamed a three-headed scheduling beast into workflows that practically schedule themselves.

Challenge

Breezeway helps property managers elevate guest experiences by streamlining operations. When I joined the company, we were juggling three versions of our task scheduling system. One was self-serve. Two were white-glove and fully custom. Together, they supported 691 client accounts, and none of them worked well at scale.

Our biggest customers managed 500+ vacation rentals and kept running into walls: limited to six scheduling rules, no advanced logic, and no way to self-serve more complex workflows. Meanwhile, competitors were offering bloated (but powerful) automation tools that overwhelmed non-technical users.

With $1.2M in ARR at risk, we had to build something better:

- Strong enough for enterprise operations

- Simple enough for non-technical users

- Unified enough to replace three fragmented systems

Solution

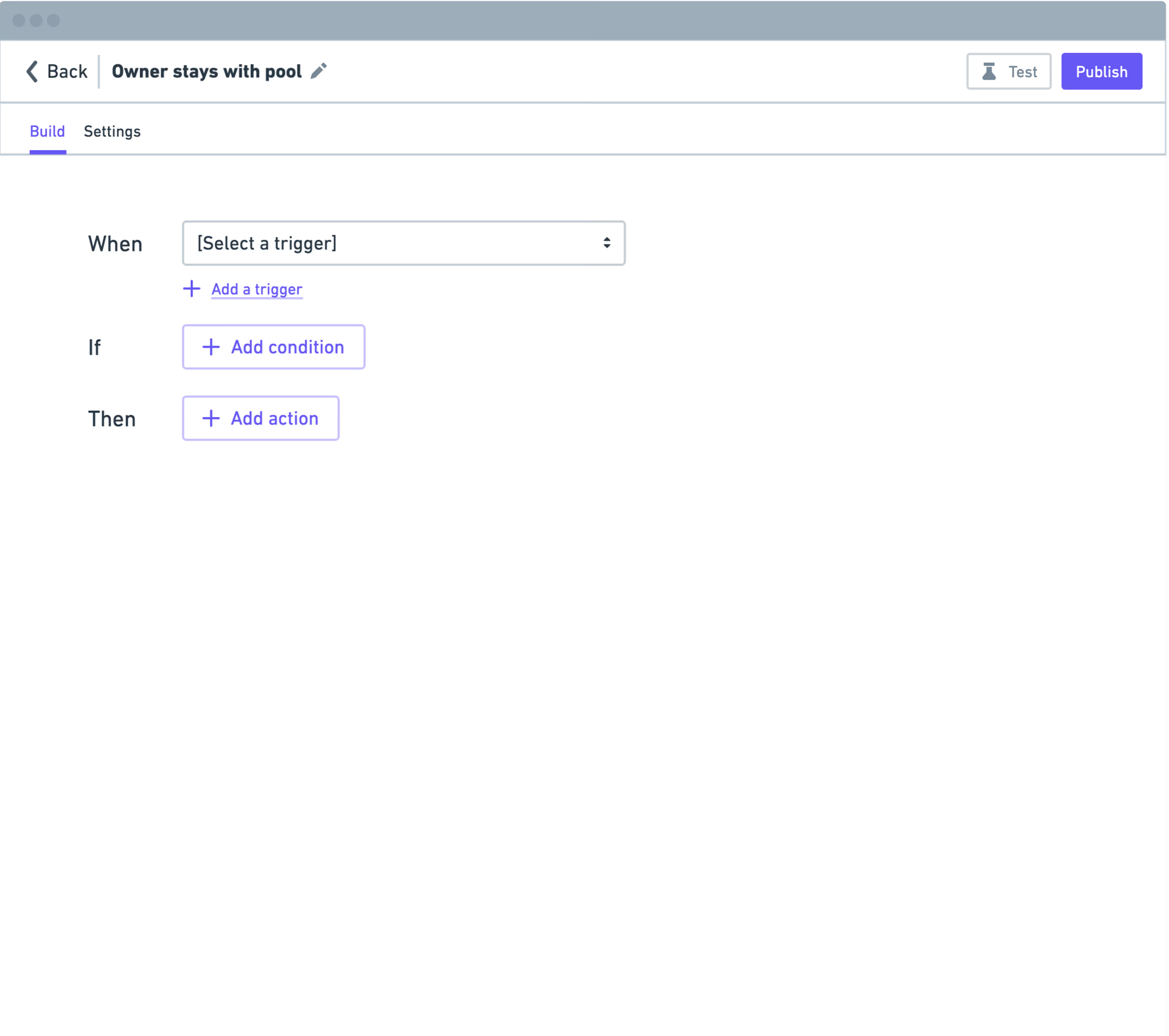

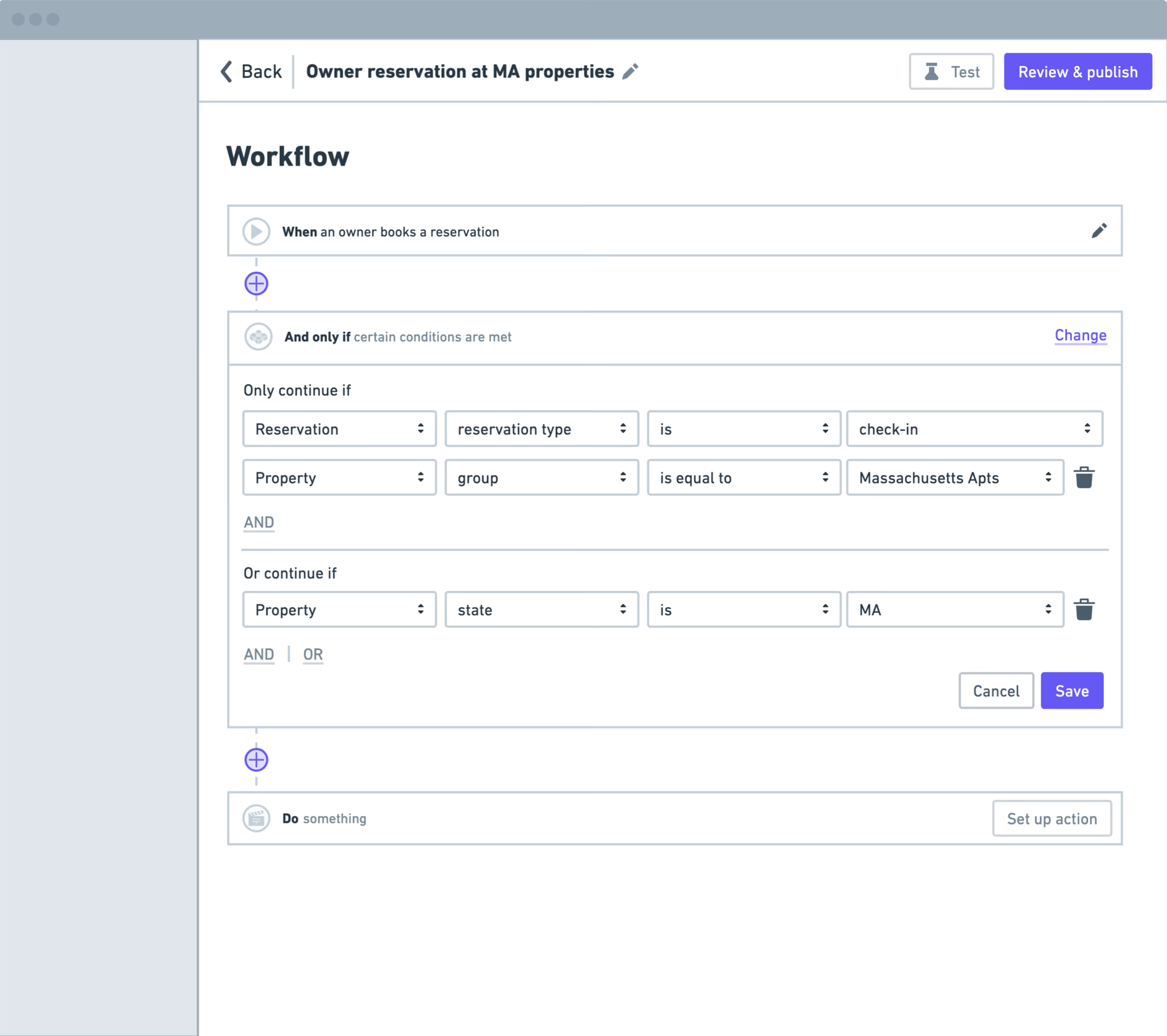

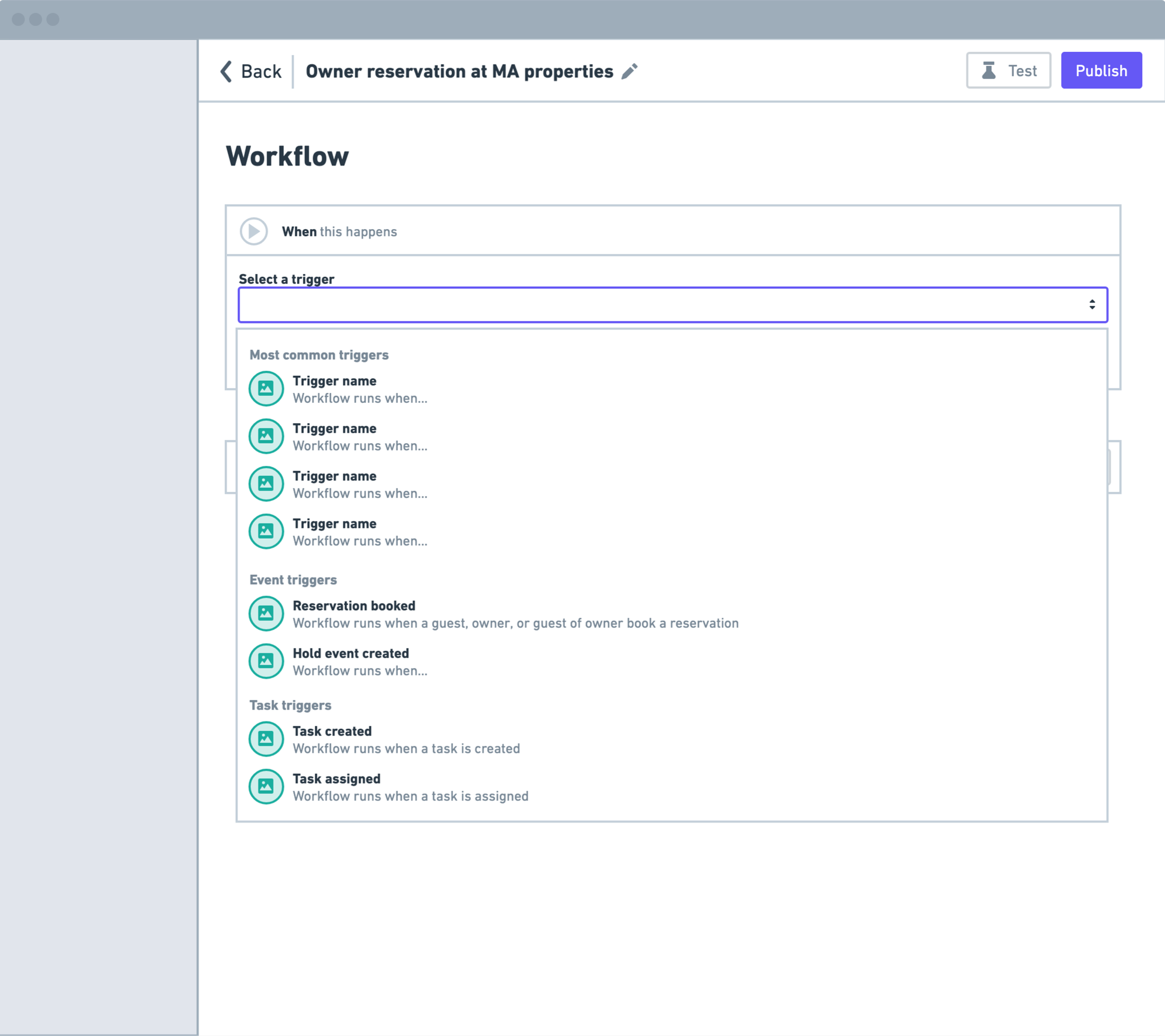

We ditched the complex, industry-standard canvas interfaces for a simpler, form-like approach that still followed the standard automation steps: pick a trigger, set conditions, choose actions. Through stakeholder workshops and user validation, we built a trigger-condition-action model and rolled it out in phases: basic rule creation first, then turn-specific scheduling, then advanced offset logic.

What we impacted

Business Impact

- ARR Growth: The new consolidated tool supported the Sales team in closing new enterprise deals, fueling growth from $7M → $12.7M ARR.

- Retention: We retained 100% of at-risk clients ($1.2M in ARR) by replacing fragmented custom solutions with a unified self-service system.

- Operational Efficiency: Reduced monthly feature requests by 90%, freeing up engineering and customer success bandwidth for higher-leverage initiatives.

Customer Experience Impact

- CSAT: Jumped from 50% → 81%, marking the highest satisfaction increase since launch.

- Reliability & Trust: Consolidating three fragmented scheduling systems into one unified tool eliminated recurring points of failure and simplified both setup and ongoing management for users.

Organizational Impact

- Node components became shared design system components. The Messaging team adopted them for their automation builder.

- Improved alignment between Product, Engineering, and Sales through a unified data and scheduling model.

What I learned

- Backend decisions matter as much as frontend ones. We built Milestone 1 to look like a simple form. Behind the scenes, we structured it as separate nodes, which let us ship fast and break the interface apart later when we needed more complexity. It also meant other teams could reuse our components.

- I learned to validate fast. Our Milestone 1 build wasn't perfect. But testing with four property managers in parallel surfaced gaps early. We adjusted in M2 and M3. Shipping imperfect work beats waiting for perfect work.

- Own your mistakes out loud. I showed the team an ambitious vision that scared them. The pushback stung, but it was fair. I simplified, focused on what we could actually build, and kept moving. Sometimes leading means knowing when to pull back.

How we tackled it

Understanding the problem space

Before we could build something better, I needed to understand why three systems existed in the first place.

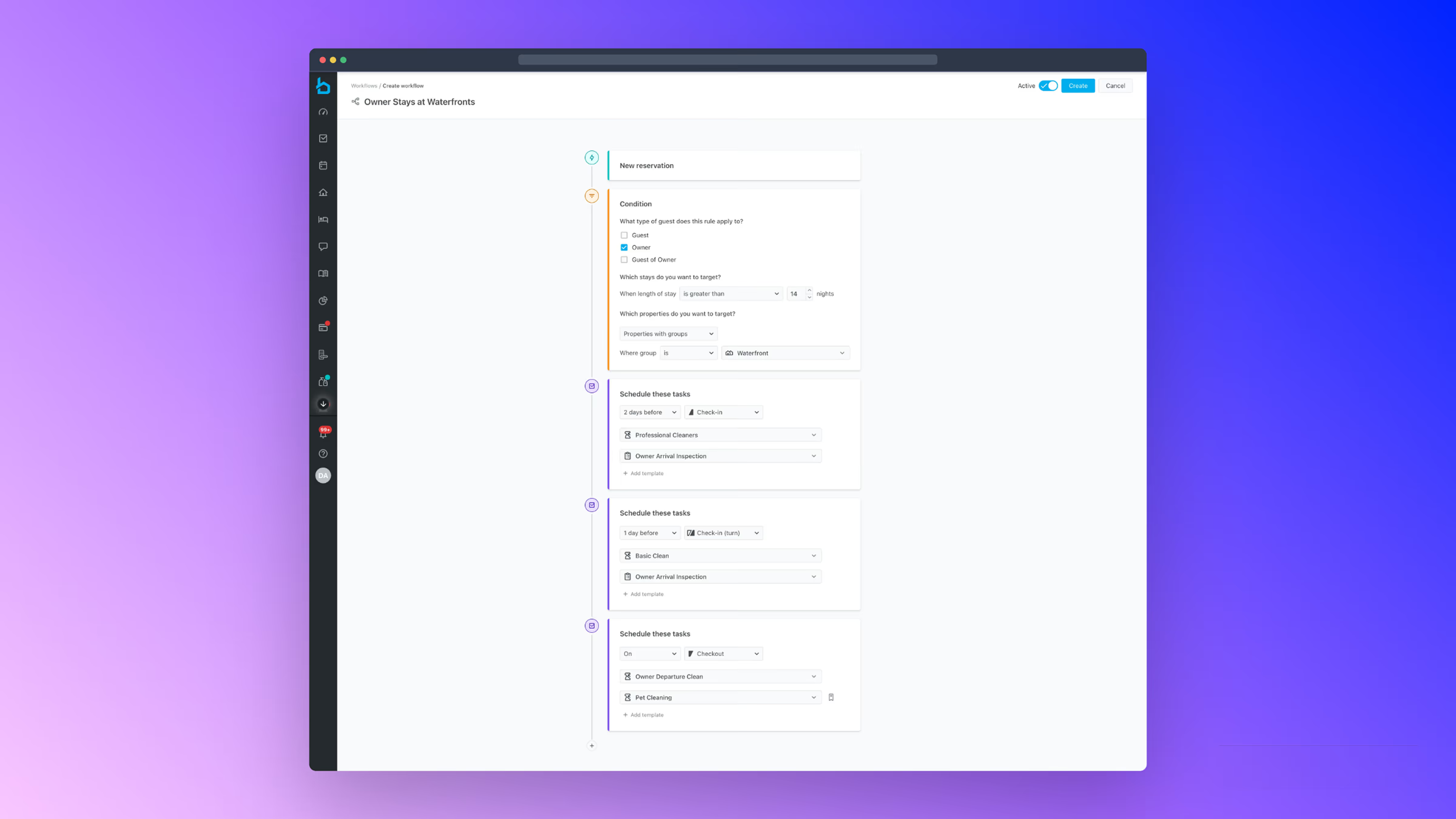

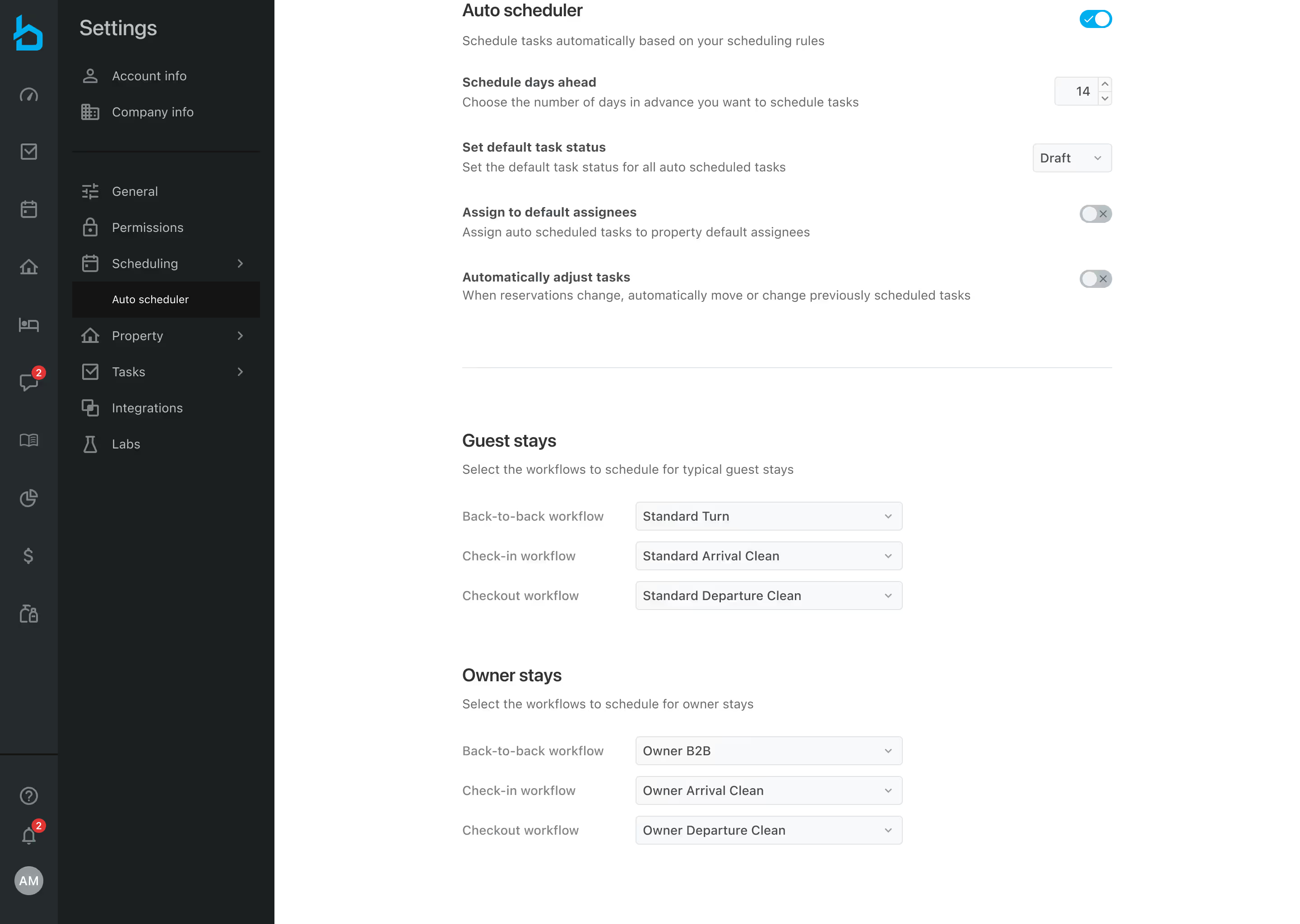

The V1 Interface

V1 was our only self-serve option. Users got six rules split across Guest Stays and Owner Stays. One workflow per reservation event (check-in, checkout, or same-day turns when one guest checks out and another checks in). It worked for simple operations, but enterprise clients needed way more flexibility.

Auto scheduler V1

Caption: In V1, we called these "back-to-back" workflows. We later standardized the term to "turns" in the final product.

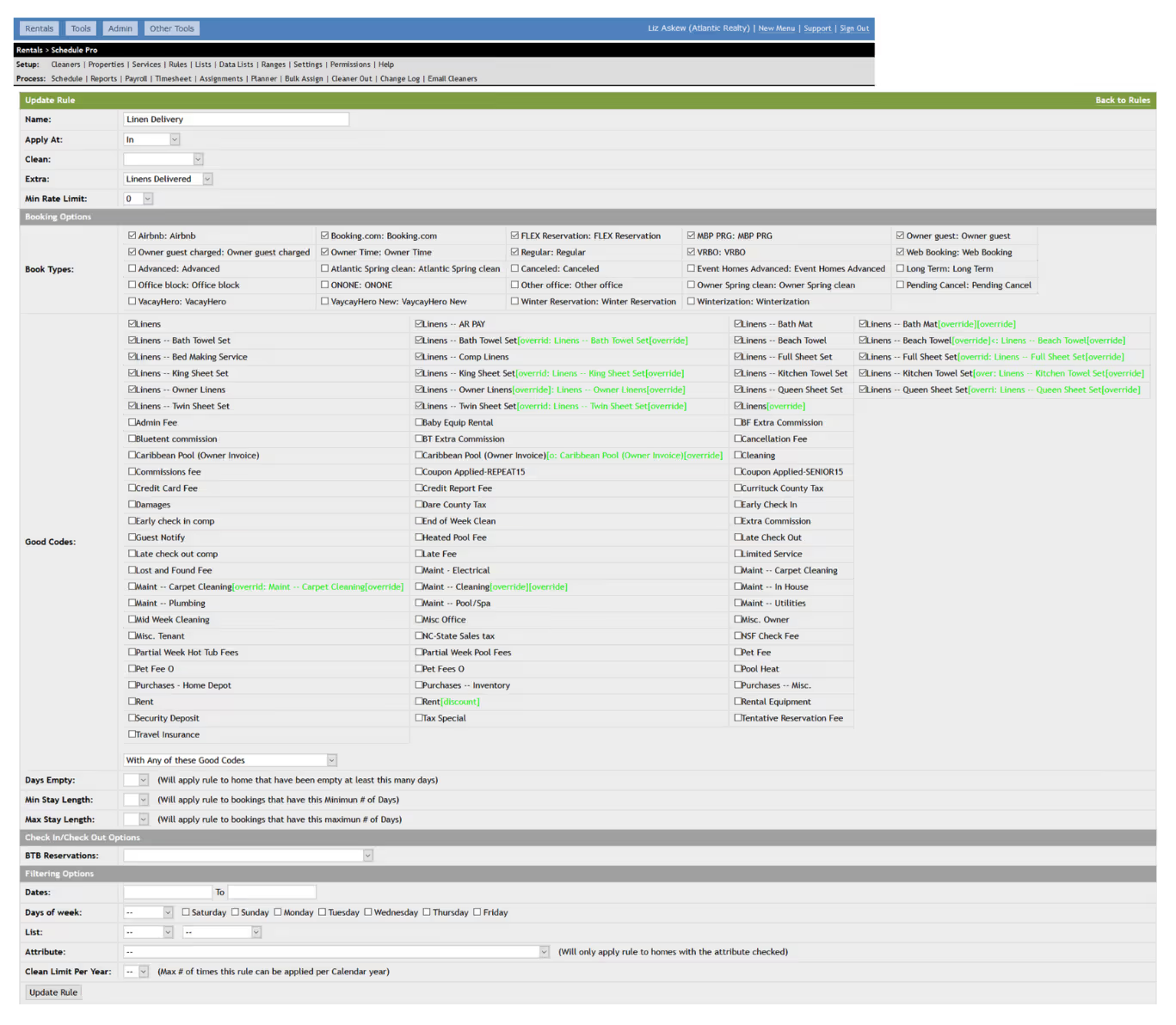

The White-Glove Bottleneck

V2 and V3 didn't have an interface. Instead, clients described what they wanted in a spreadsheet, support translated it into engineering tickets, and weeks later, rules went live on their calendar. A single rule took 1 to 3 weeks to implement.

Even after all that waiting and cross-team work, clients would review what we built in a sandbox and either not understand it or realize it didn't work the way they expected. Sometimes the unexpected behavior prompted change requests, which dragged the process out even further.

Auto scheduler V2

Auto scheduler V3

Studying what already existed

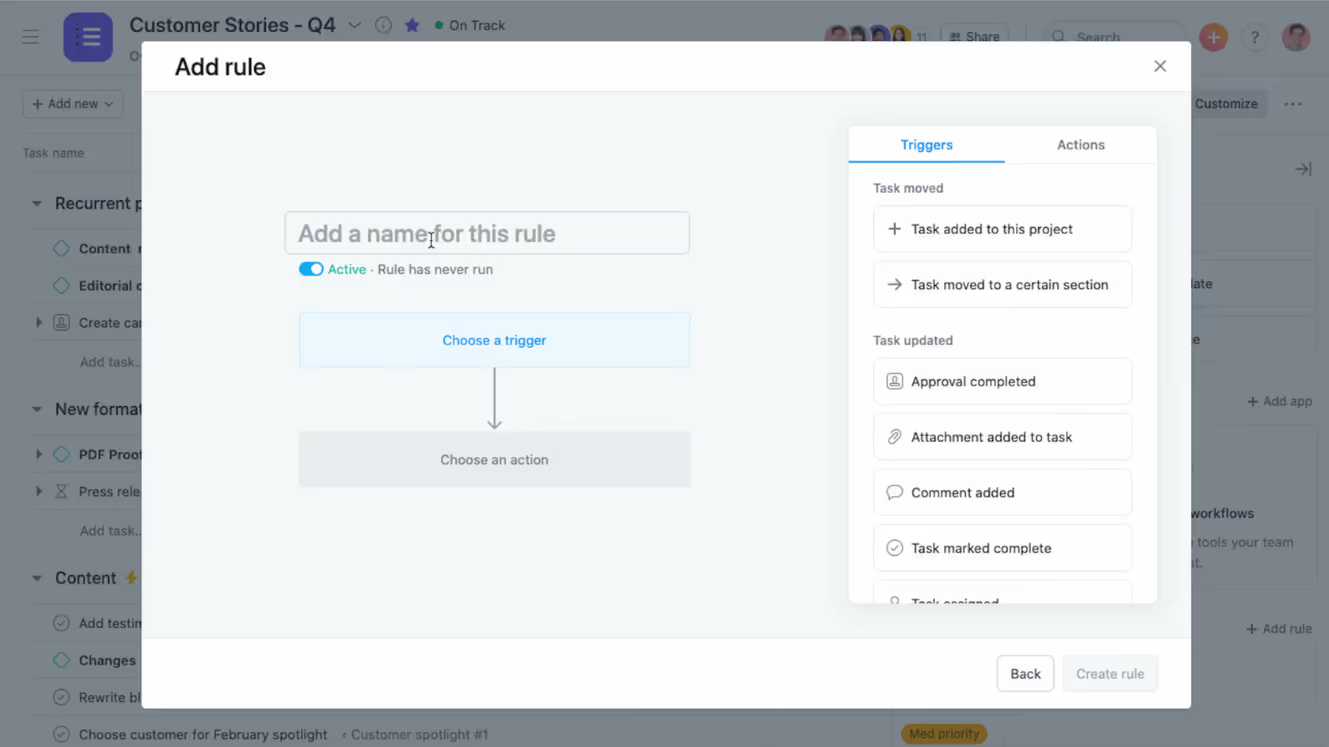

I audited 8 automation platforms to understand what flexible patterns could apply to our redesign. I looked at direct competitors like LSI Tools, project management tools like Asana, and workflow builders like Zapier.

LSI Tools

The flexibility across all these platforms was impressive, but the ones with the worst UX overwhelmed users with feature-rich interfaces that dumped too much information at once.

The more approachable examples used plain-language patterns ("When this → do that") and progressive disclosure principles. They didn't show you everything upfront. They revealed complexity as you needed it. Asana and Monday.com stood out for their clarity and simplicity. That insight stuck with me. We could give users power without drowning them in options.

Monday.com

Asana

Starting broad, then focusing

My early wireframes explored automation beyond task scheduling. What if we could automate messaging? Property updates? Task management itself?

Engineering and Support had already provided us with common V2 and V3 use cases. But I didn't want us to box ourselves in. I wanted to consider what else automation could unlock across the platform.

Early wireframe explorations

Intercom-inspired

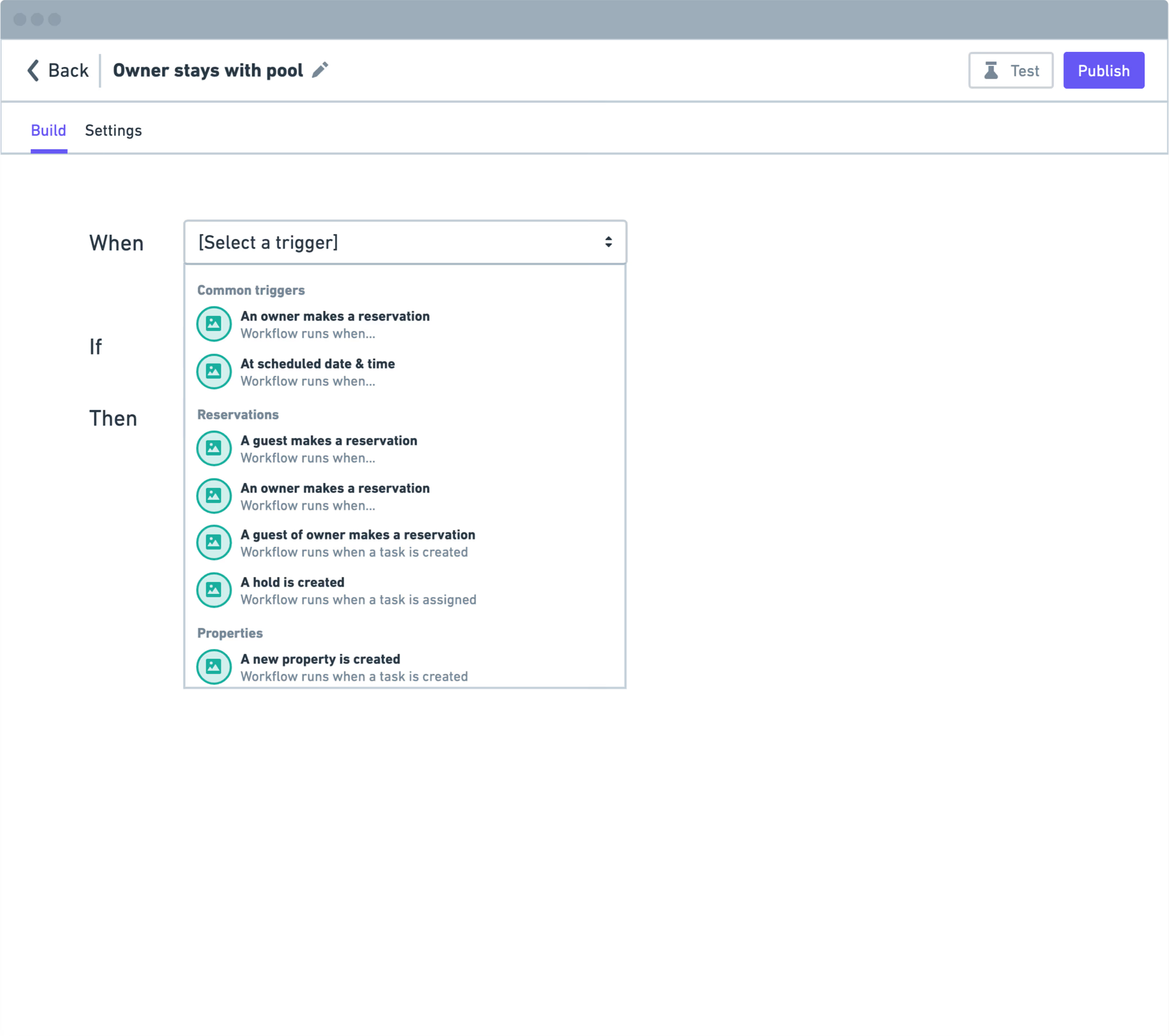

I started with a clean, linear flow. When / If / Then stacked vertically. You selected a trigger from a dropdown to get started. As you added triggers, conditions, and actions to the canvas, more fields would progressively appear.

☝🏼 The product team decided against this version. It was too flexible. We needed a solution that wouldn't overwhelm users with so many choices that they'd be prone to mistakes.

Zapier-inspired

This concept was similar to the Intercom version but added more structure. The workflow's path and actions were more visible, which made the logic easier to follow.

☝🏼 The team had similar concerns. Too many options. Plus the AND/OR logic was hard to follow in this form structure. It didn't support connector arrows to Yes or No paths like a flexible canvas would.

Taking accountability

I got too excited about the "what ifs" of automation in our platform. The team reacted with overwhelm and defensiveness. I should've pared it down before presenting. But I still got my point across: we should start with a solution that felt like filling out a form, then layer in complexity as we needed it. I moved on to high-fidelity mockups for the first milestone.

Getting stakeholders aligned

My PM and I ran a collaborative workshop with key stakeholders, CEO included. I presented the automation audit takeaways, a North Star vision, and the Milestone 1 direction. Stakeholders gave feedback using a 'Like / Wish / Wonder' framework in a Kanban board as we presented.

Workshop feedback (kanban)

The feedback was direct. Stakeholders reminded us about our non-technical user base. They'd struggle with overly complex interfaces. The takeaway: simplicity over flexibility. Our users didn't want a power tool. They wanted to be able to jump in and start building rules without the time-consuming hand-holding. This helped us see where alignment existed and where concerns needed addressing.

Key Takeaways from Automation Audit

Key takeaways I identified across 8 platforms that informed our design approach.

⭐️ North Star Vision

I presented a simplified North Star to show where automation could expand beyond task scheduling: messaging, properties, task management itself. Then I showed detailed Milestone 1 mocks as the starting point.

Milestone 1

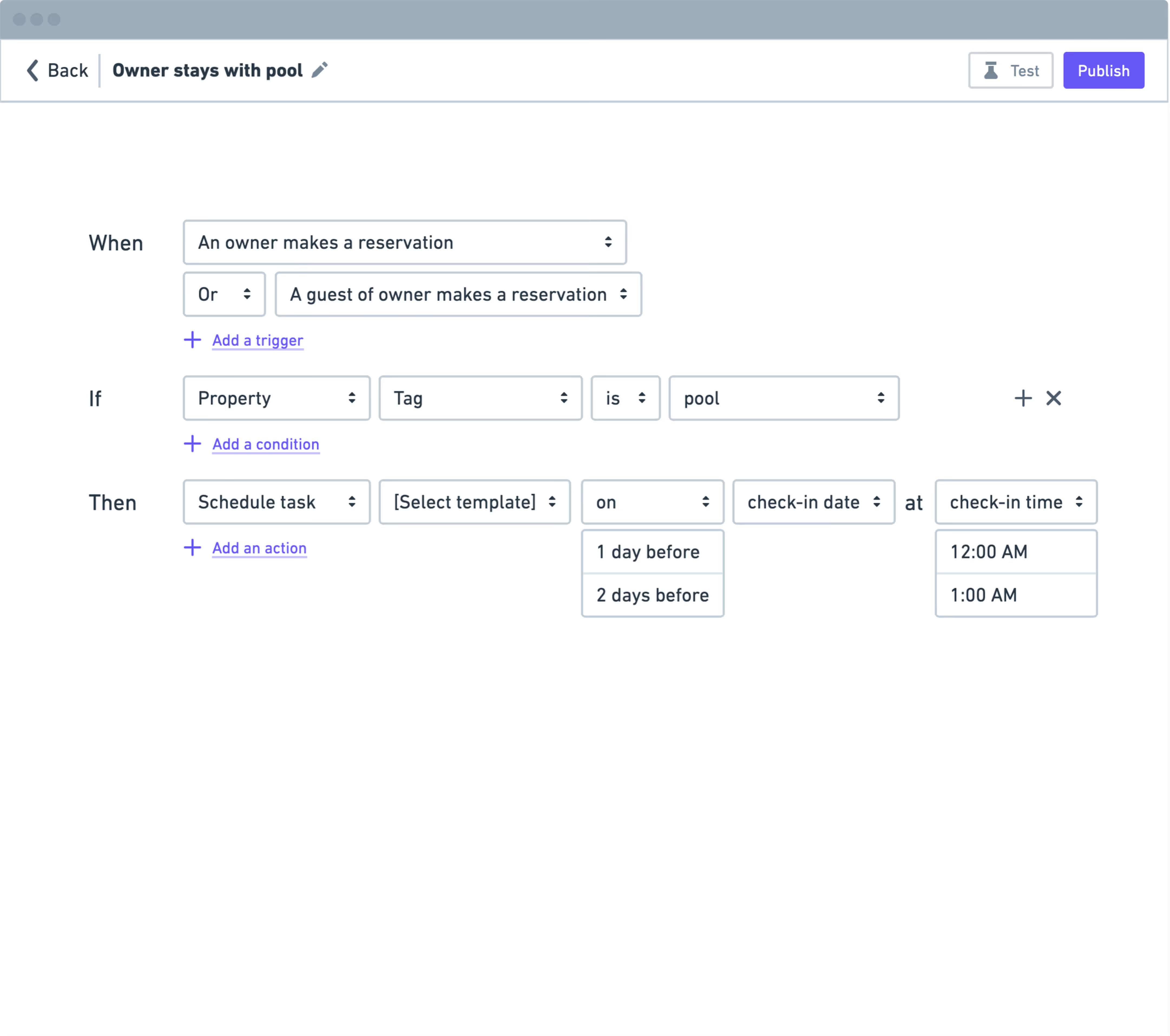

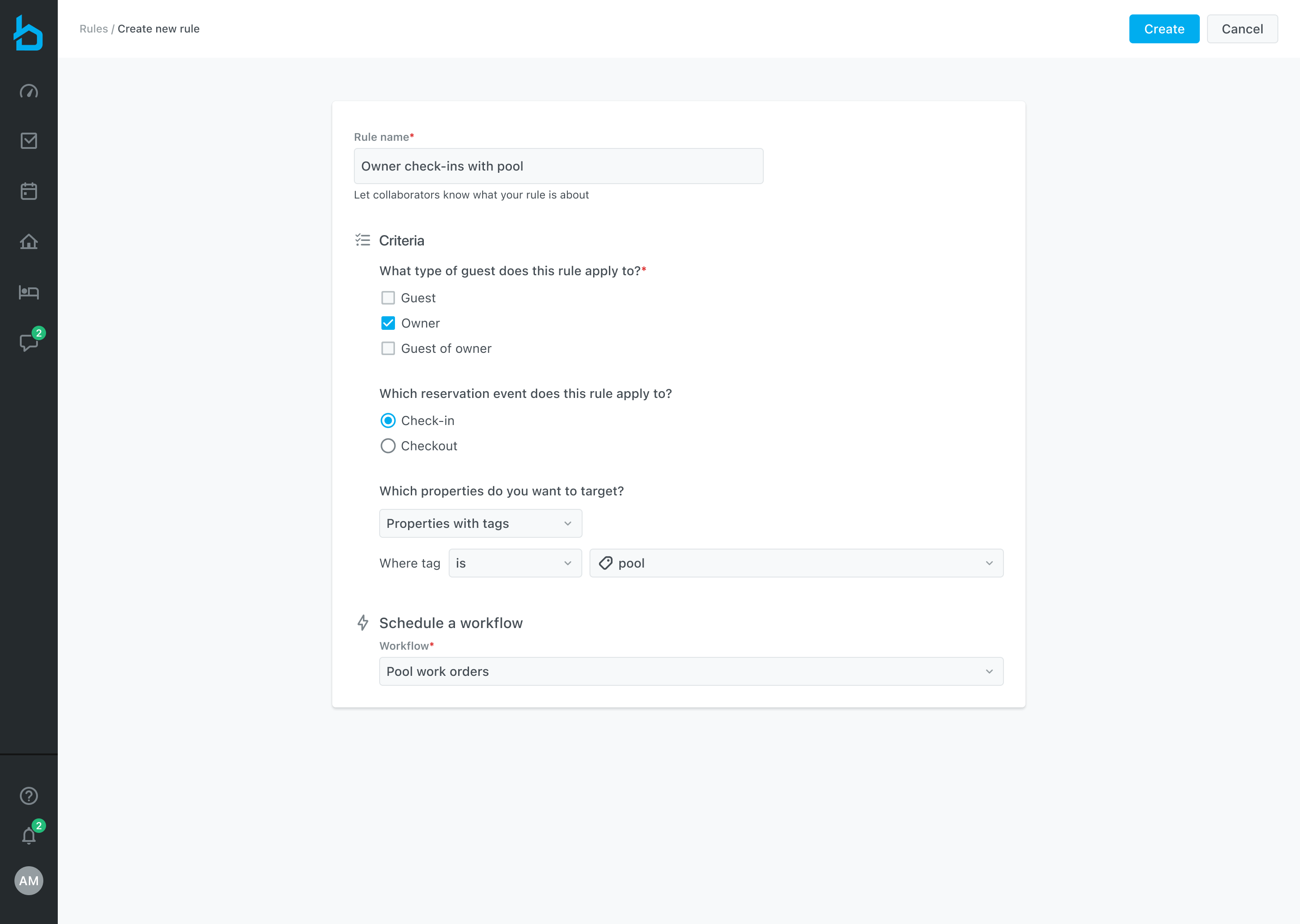

M1 focused on the core logic. Users could create unlimited workflows triggered by precise reservation events: check-in and checkout. They'd set basic conditions like guest type, property tags, or property groups, then pick a workflow to schedule. One workflow per rule. No day offsets yet. No multiple actions yet. Just enough to prove the model worked and replace V1's six-rule limitation.

Caption: The user is creating a pool task automation for owner stays at properties tagged 'pool.' The automation triggers on the check-in day of a standard reservation.
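To make the M1 trigger-condition-action shape concrete, here is a minimal Python sketch of a single rule, modeled on the pool example in the caption. The class names, field names, stay-type labels (`"owner"`, `"guest"`), and the workflow name `"Pool service"` are hypothetical; the sketch illustrates the structure of the model, not Breezeway's actual schema.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Reservation:
    stay_type: str                 # hypothetical labels, e.g. "owner" or "guest"
    property_tags: frozenset[str]
    check_in: date
    checkout: date


@dataclass(frozen=True)
class Rule:
    trigger: str                   # "check_in" or "checkout"
    stay_type: str                 # condition: which kind of stay the rule targets
    required_tags: frozenset[str]  # condition: property must carry all of these tags
    workflow: str                  # M1 allowed exactly one workflow per rule

    def matches(self, res: Reservation) -> bool:
        # Conditions are combined with AND: stay type and tags must both match.
        return res.stay_type == self.stay_type and self.required_tags <= res.property_tags

    def schedule(self, res: Reservation) -> list[tuple[str, date]]:
        # M1 had no day offsets, so the task lands on the trigger date itself.
        if not self.matches(res):
            return []
        day = res.check_in if self.trigger == "check_in" else res.checkout
        return [(self.workflow, day)]


# Owner stays at properties tagged "pool", triggered on check-in day.
pool_rule = Rule("check_in", "owner", frozenset({"pool"}), "Pool service")
```

Because each rule is a small, independent record, adding more rules never requires touching existing ones, which is what let M1 lift V1's six-rule ceiling.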

Reactions

Reactions were split. The CEO found M1 super approachable but was concerned that the North Star was too ambitious and unnecessary. Our VP of Product & Design wanted me to push the vision further because M1 didn't feel like it would scale well ("What happens when we need to add more conditions and actions in? Is your form going to get bloated and unusable?"). Most stakeholders, including those on the front lines talking to customers daily, agreed that M1 felt approachable for our users.

Finding our model

After the workshop, I met with my PM, VP of Product, and Tech Lead to debrief and figure out next steps.

What people liked:

The node concept from the vision. Nodes felt like building blocks we could add to as needed.

What scared them:

When I showed the non-abstracted vision mocks to the team, they pushed back. Clicking a node opened a flyout with more options. Nodes supported conditional paths with connector arrows. Engineering was hesitant about these features because our existing flyouts were poorly built and fragile, and building new flyouts from scratch would add months.

The decision:

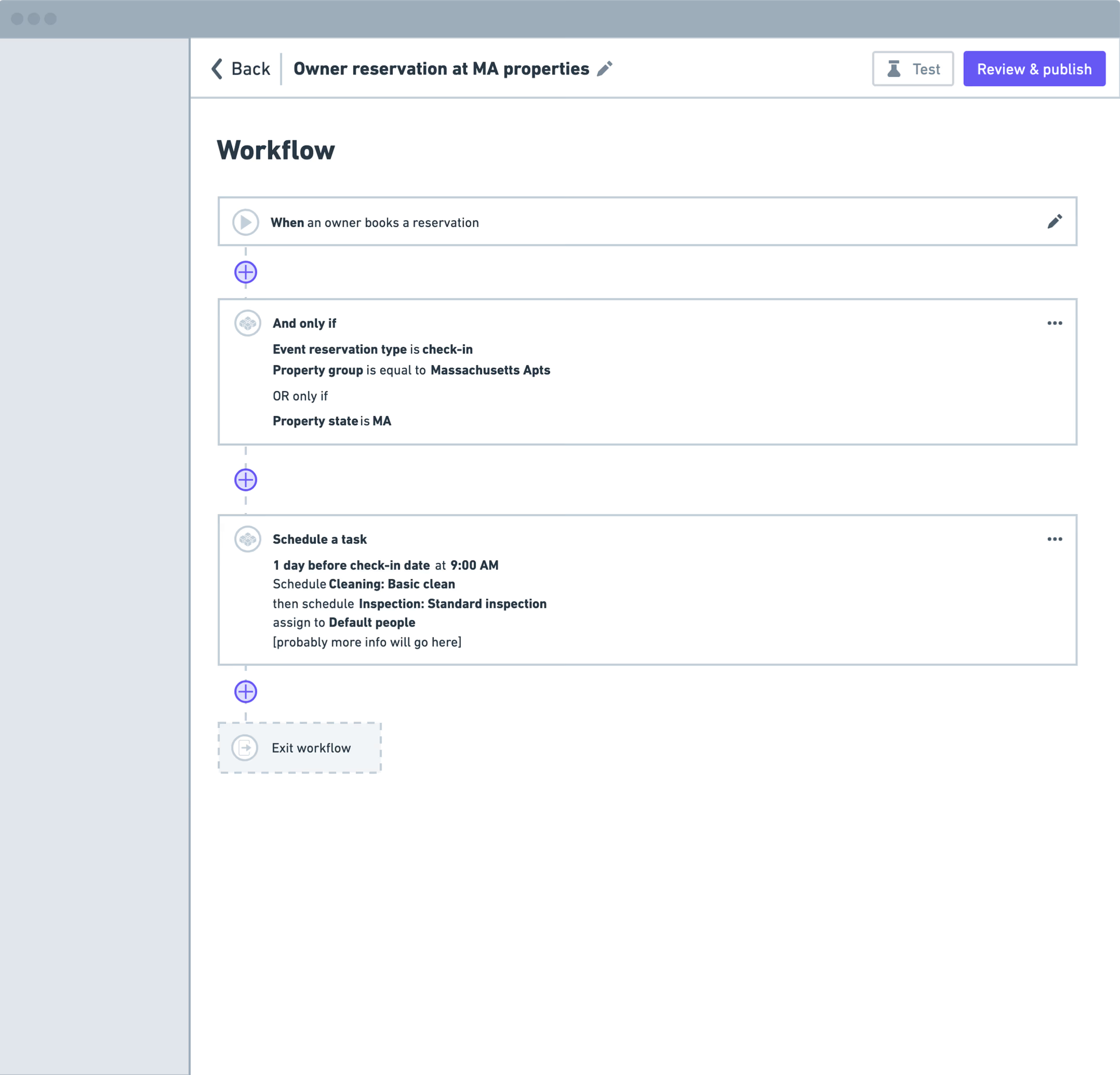

I could have fought for a concept closer to the vision. But getting V1 users testing the core logic mattered more than perfect scalability on day one. The compromise: Ship M1 as a simple form. Structure it as nodes on the backend. Break it into visual nodes in M2 and M3 once we validated the model worked.

The outcome:

My VP liked the middle ground. We shipped M1, tested with users, and successfully migrated all V1 accounts. The system covered every common V2 and V3 use case. The design wasn't as scalable as my VP had hoped, but we validated the core model and kept moving. Speed over perfection. The trigger-condition-action model crystallized, and we'd roll it out with progressively increasing complexity.

Testing Milestone 1

We had a strong signal from the workshop and debrief that the core logic would work and let us start migrating our V1 customers right away, so Engineering started building immediately. I tested the prototype from the workshop with four property management clients in parallel to validate assumptions and surface gaps early.

What worked:

The core logic landed immediately. Users understood the rule structure without explanation. One manager said she could 'jump in and make rules easily' and that training her team would be simple.

What didn't work:

Testing revealed we were missing occupancy and vacancy-based triggers. These gaps, along with turn support (which we'd already planned to temporarily defer), would need to be addressed in later milestones to avoid blocking adoption for some clients.

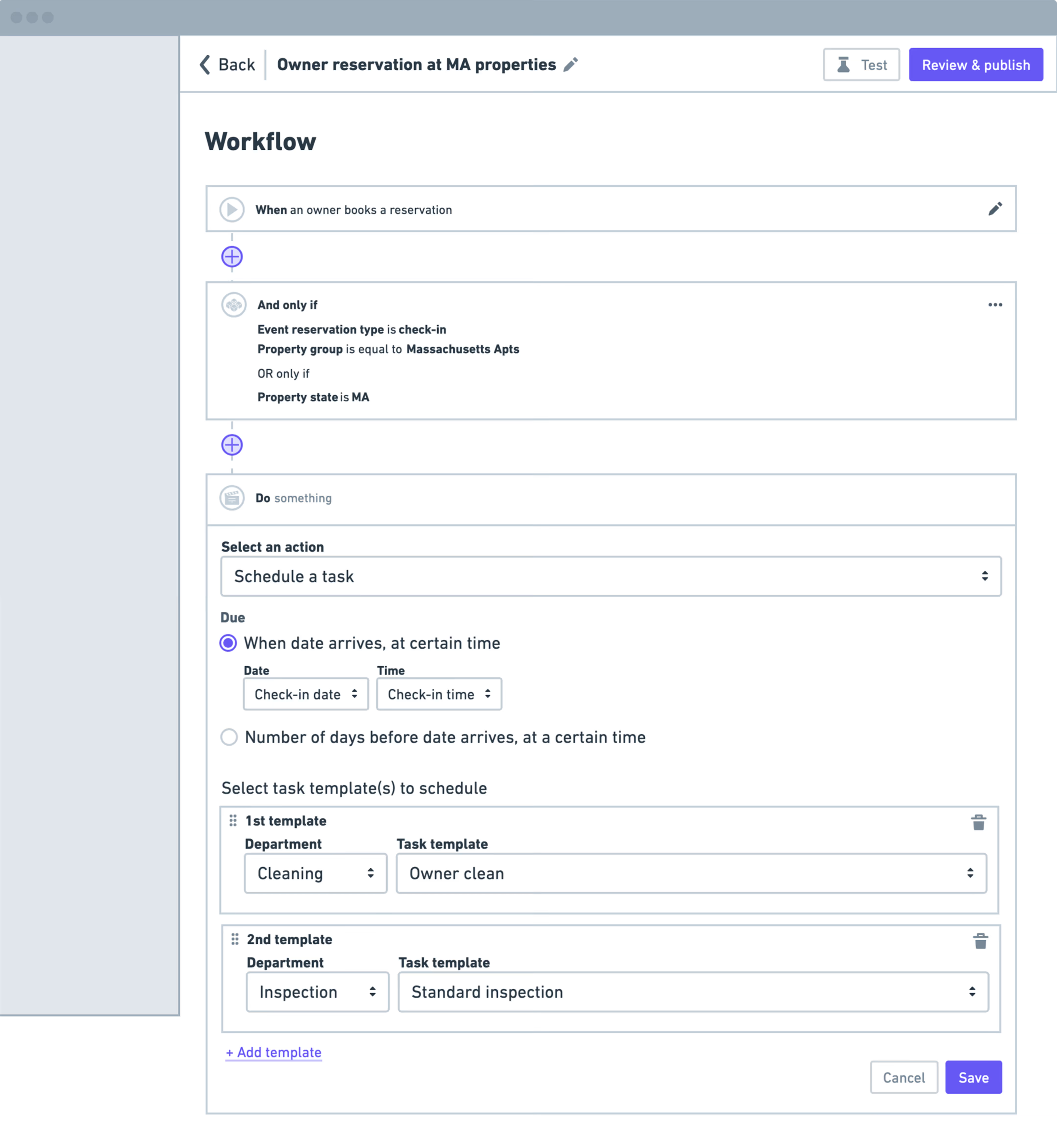

Milestone Progression

M2: Unlocking Turns

Key capabilities unlocked: Nodes and turn reservation support.

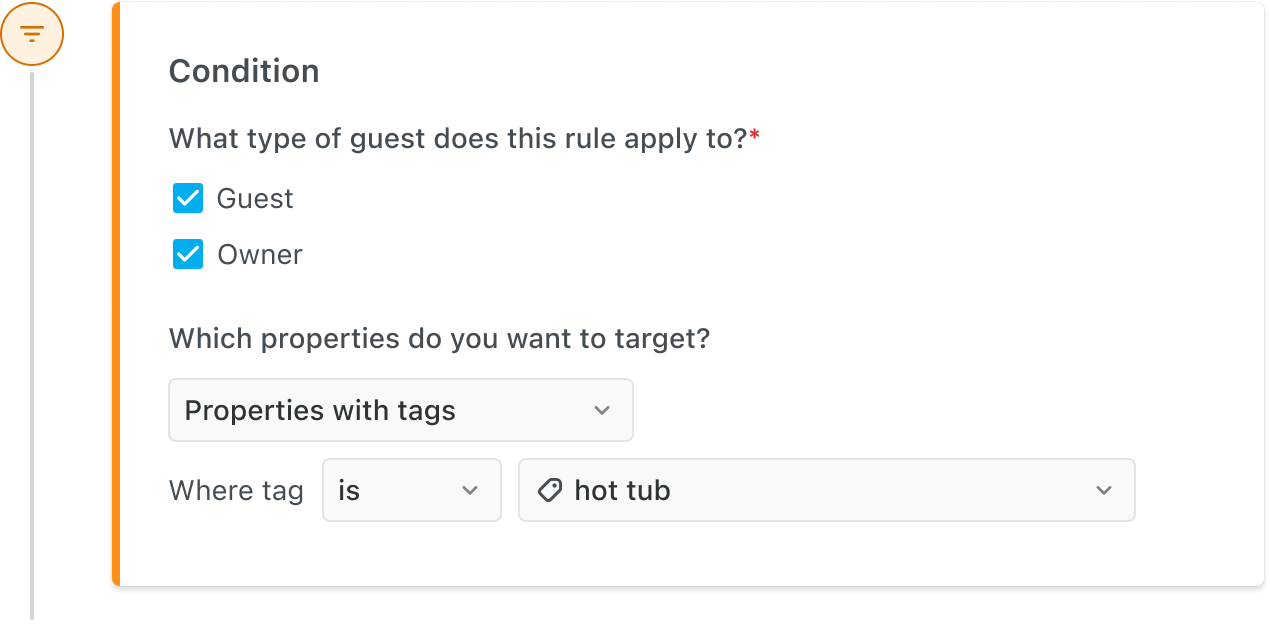

We shifted from a single form to a node-based structure for scalability. This unlocked turn support. Users could schedule tasks on check-in (turn) or checkout (turn) separately.

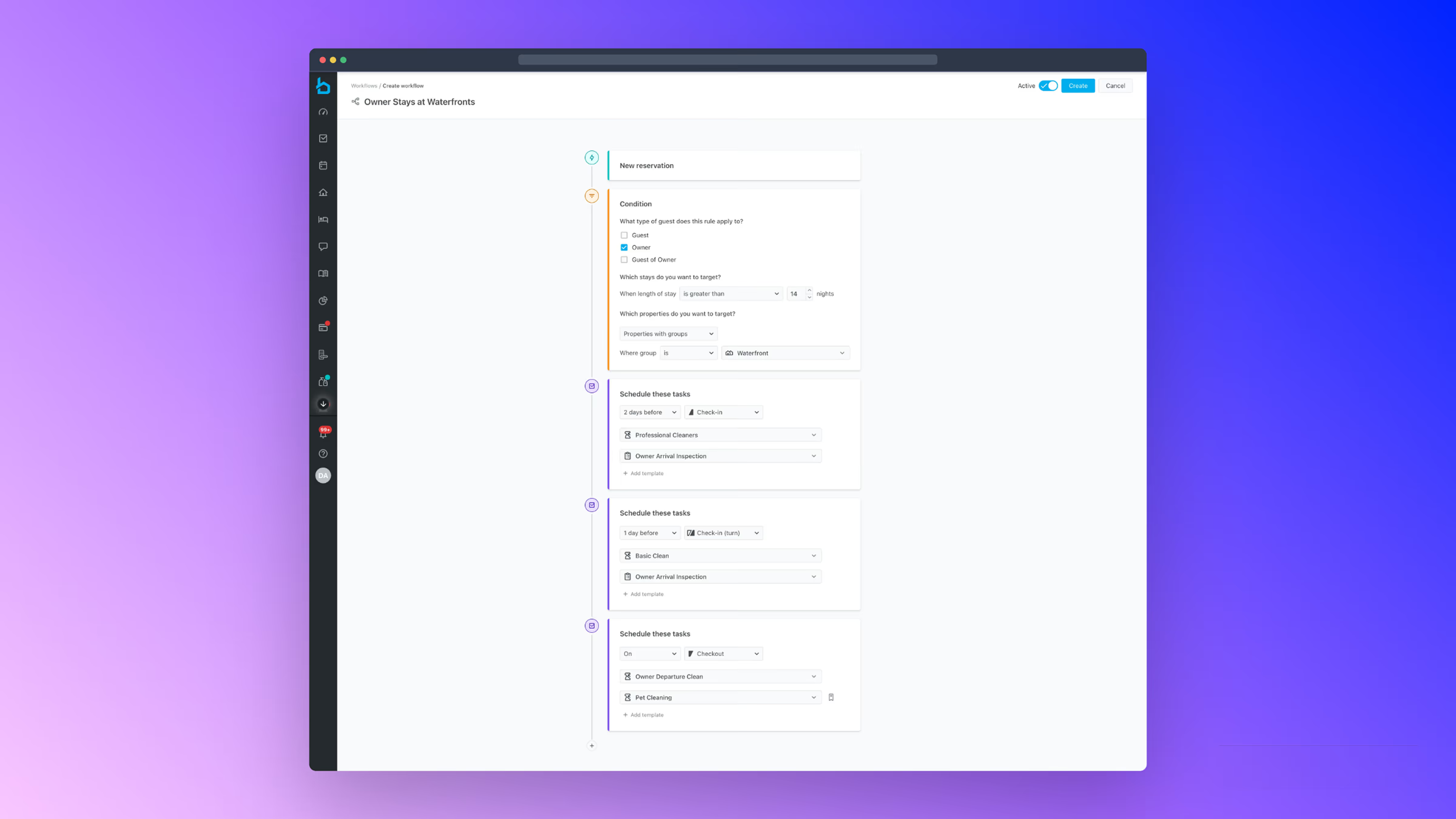

Caption: The user is creating a pool task automation for owner stays at properties tagged 'pool.' The automation triggers on the check-in side of a turn.

Instead of treating turns as a separate event type, we made them qualifiers of check-in or checkout events. Property managers got precise control over when tasks happened during turns.
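A minimal Python sketch of the "turns as qualifiers" idea, assuming a turn is detected when one stay checks out and another checks in at the same property on the same day (the definition V1 used for back-to-back stays). Everything here (`Stay`, `Event`, `events_for`, `trigger_fires`) is hypothetical and illustrates the modeling choice, not Breezeway's implementation.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Stay:
    property_id: str
    check_in: date
    checkout: date


@dataclass(frozen=True)
class Event:
    property_id: str
    kind: str       # only two event types: "check_in" or "checkout"
    day: date
    is_turn: bool   # the turn is a qualifier on the event, not a third event type


def events_for(stays: list[Stay]) -> list[Event]:
    """Emit check-in and checkout events, flagging a turn when one stay checks
    out and another checks in at the same property on the same day."""
    checkouts = {(s.property_id, s.checkout) for s in stays}
    checkins = {(s.property_id, s.check_in) for s in stays}
    events = []
    for s in stays:
        events.append(Event(s.property_id, "check_in", s.check_in,
                            (s.property_id, s.check_in) in checkouts))
        events.append(Event(s.property_id, "checkout", s.checkout,
                            (s.property_id, s.checkout) in checkins))
    return events


def trigger_fires(kind: str, turn_only: bool, event: Event) -> bool:
    # "Check-in (turn)" = the check-in trigger plus the turn qualifier.
    # Assumption: an unqualified trigger fires on every matching event, turn or not.
    return event.kind == kind and (event.is_turn or not turn_only)
```

Keeping the event vocabulary at two types means a rule never has to choose between "check-in" and a separate "turn" trigger; the qualifier narrows an existing trigger instead.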

M3: Multi-Action Nodes & Day Offsets

Key capabilities unlocked: Multi-action nodes, direct template selection, and day offsets (days before check-in/after checkout)

Users could add multiple action nodes and multiple task templates to each action. Day offsets let users schedule tasks relative to reservation events. Instead of only scheduling a task "on check-in," they could now set tasks for "3 days before check-in" or "2 days after checkout." This gave users precise control over task timing.

Caption: The user is creating a pool task automation for owner stays at properties tagged 'pool.' The automation includes three actions scheduled at different times: 2 days before check-in, 1 day before check-in (turn), and on checkout. At the end, they check the Property Schedule to verify the tasks were applied to the correct properties and reservation events.

Property managers could finally automate:

- Turn on pool heat 2 days before check-in

- Schedule inspection 1 day before check-in (turn)

- Schedule deep clean 1 day after checkout
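Day offsets reduce to simple date arithmetic relative to the anchor event. The Python sketch below encodes the three pool examples as one multi-action rule. It assumes offsets are stored as a single signed day count (negative for "before", positive for "after") and that a turn-qualified action is skipped when its anchor event isn't a turn; `Action`, `schedule`, and the template names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Action:
    template: str
    anchor: str              # "check_in" or "checkout"
    offset_days: int = 0     # signed: -2 = "2 days before", +1 = "1 day after"
    turn_only: bool = False  # only fire when the anchor event is a turn


def schedule(actions: list[Action], check_in: date, checkout: date,
             check_in_is_turn: bool = False,
             checkout_is_turn: bool = False) -> list[tuple[str, date]]:
    tasks = []
    for a in actions:
        if a.anchor == "check_in":
            anchor_day, is_turn = check_in, check_in_is_turn
        else:
            anchor_day, is_turn = checkout, checkout_is_turn
        if a.turn_only and not is_turn:
            continue  # turn-qualified action is skipped on a non-turn event
        tasks.append((a.template, anchor_day + timedelta(days=a.offset_days)))
    return tasks


# The three pool examples, as one multi-action rule.
pool_actions = [
    Action("Turn on pool heat", "check_in", -2),
    Action("Inspection", "check_in", -1, turn_only=True),
    Action("Deep clean", "checkout", +1),
]
```

For a stay from July 10 to July 14 whose check-in is a turn, this yields pool heat on July 8, the inspection on July 9, and the deep clean on July 15. Storing one signed integer keeps "before" and "after" as a display concern in the form rather than a second field in the data.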

De-risking migration

Before beta launch, our dedicated white-glove support person tested the builder against every common V2 and V3 use case. She'd handled all the white-glove requests and knew exactly which patterns clients actually needed versus what they initially asked for.

Her testing confirmed the new tool covered all existing V2 and V3 use cases. She also caught bugs before they could reach client accounts.

Beta launch & feature improvements

Once M3 was complete, we launched an open beta. V1 users could self-migrate. V2 and V3 migrations required engineering support. We collected feedback from beta users and Support, which informed our next improvements.

What we added post-launch

Condition nodes

We separated conditions from triggers to create dedicated space for complex logic like length of stay targeting.

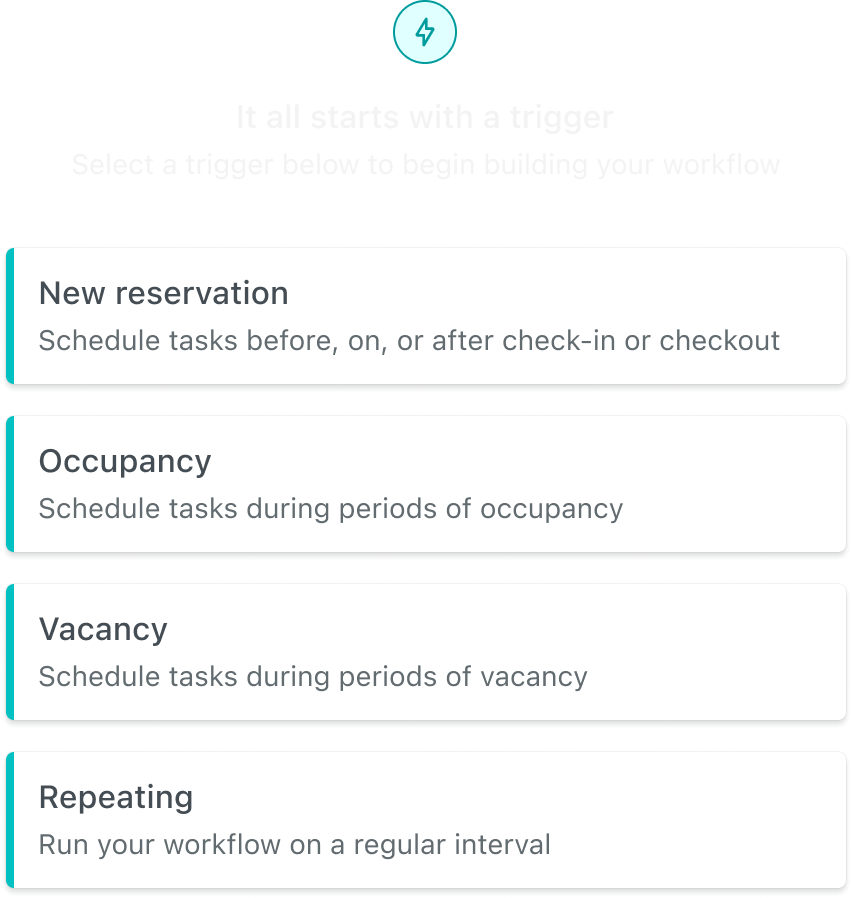

New trigger types

We added Occupancy, Vacancy, and Repeating triggers to expand automation beyond reservation check-in and checkout events.

Reservation data targeting

Users could target automations based on reservation add-ons like "if guest books rollaway bed, schedule delivery task."

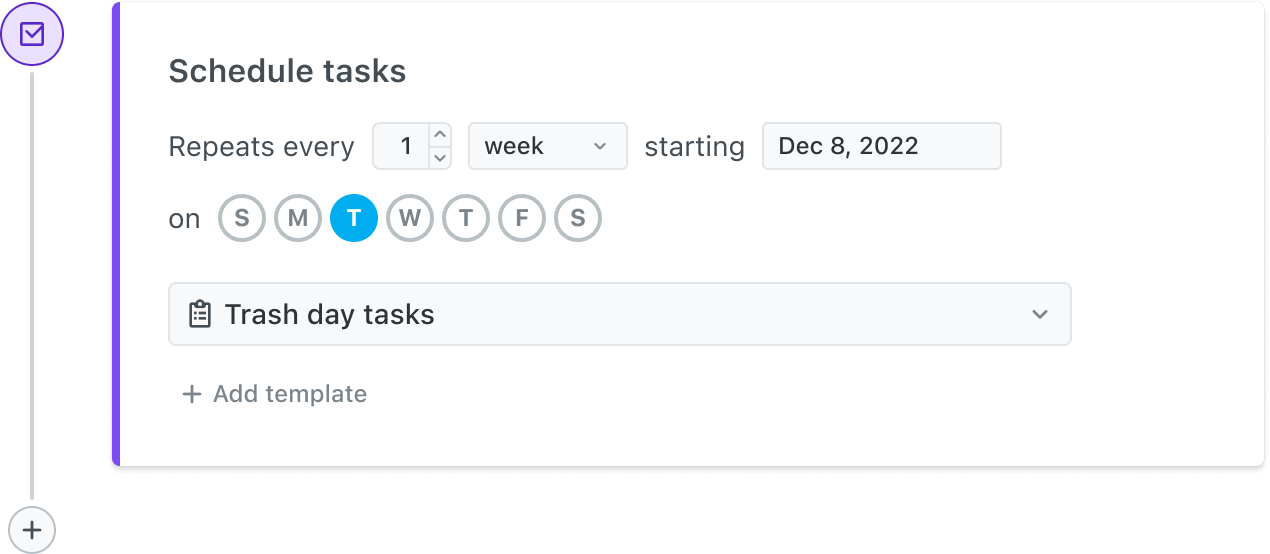

Repeating schedules

Users could set tasks to repeat at regular intervals for ongoing property management needs.

Length-of-stay targeting

Users could create rules based on duration, like "if occupancy is greater than 7 days, schedule mid-stay cleaning."
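Condition nodes can be sketched as small predicates over a reservation that a rule checks before it schedules anything. This Python sketch encodes the length-of-stay and add-on examples above. The names, the `"rollaway bed"` add-on label, counting length of stay in nights, and combining conditions with AND are all illustrative assumptions, not details of the product.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass(frozen=True)
class Reservation:
    check_in: date
    checkout: date
    add_ons: frozenset[str] = frozenset()

    @property
    def nights(self) -> int:
        # Assumption: length of stay is measured in nights.
        return (self.checkout - self.check_in).days


# A condition node is modeled here as a predicate over a reservation.
Condition = Callable[[Reservation], bool]


def stay_longer_than(nights: int) -> Condition:
    return lambda r: r.nights > nights


def has_add_on(name: str) -> Condition:
    return lambda r: name in r.add_ons


@dataclass
class Rule:
    conditions: list[Condition]
    task: str

    def applies_to(self, r: Reservation) -> bool:
        # Assumed semantics: every condition node must pass (logical AND).
        return all(cond(r) for cond in self.conditions)


mid_stay = Rule([stay_longer_than(7)], "Mid-stay cleaning")
rollaway = Rule([has_add_on("rollaway bed")], "Rollaway bed delivery")
```

Separating conditions from triggers this way means new targeting options become new predicates, while the trigger and action nodes stay unchanged.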

The Final System: Automated Workflows

After expanding the builder based on beta feedback, we had a system that could replace all three legacy versions. The core trigger, condition, action structure proved flexible enough to handle both simple and sophisticated workflows.

Final Product walkthrough

Example: A property manager creates a workflow for owner stays at waterfront properties.

Context

This example focuses on a property in the 'Waterfront' property group. Here, the property manager verifies that the property belongs to that group.

Caption: The user clicks on the property name from the Property Schedule calendar to confirm it falls under the 'Waterfront' property group within the property profile.

Creating the workflow

Caption: The user sets up a New Reservation trigger for owner stays at Waterfront properties with stays longer than 3 nights. Three separate actions schedule tasks at different times: 1 day before check-in, 1 day before check-in on turnovers, and on checkout.

Verifying it worked

The workflow runs in the background. The property manager checks the Property Schedule to confirm tasks appeared on the right properties at the right times.

Caption: The user opens the Property Schedule and finds the property from the Waterfront group. The 'Owner Stays at Waterfronts' workflow applied correctly. Tasks show up at the scheduled times relative to the reservation dates.

What made it work

What made this system succeed where three versions failed:

- Self-service: No more spreadsheets or engineering tickets. Clients could build and edit workflows themselves.

- Visual logic: The node structure made automation transparent. Clients could see how rules would behave before activating them.

- Migration-ready: We systematically covered existing use cases while enabling new ones. We could confidently sunset all three legacy versions.

Beyond Automated Workflows

The node components became part of our design system. The Messaging team adopted them for their Custom Auto Messages feature. I moved the components from my local project file to our design system, wrote up tickets for the Messaging team to add to Storybook, and worked with their engineer on implementation. I also helped their designer update their design to use the standardized component.

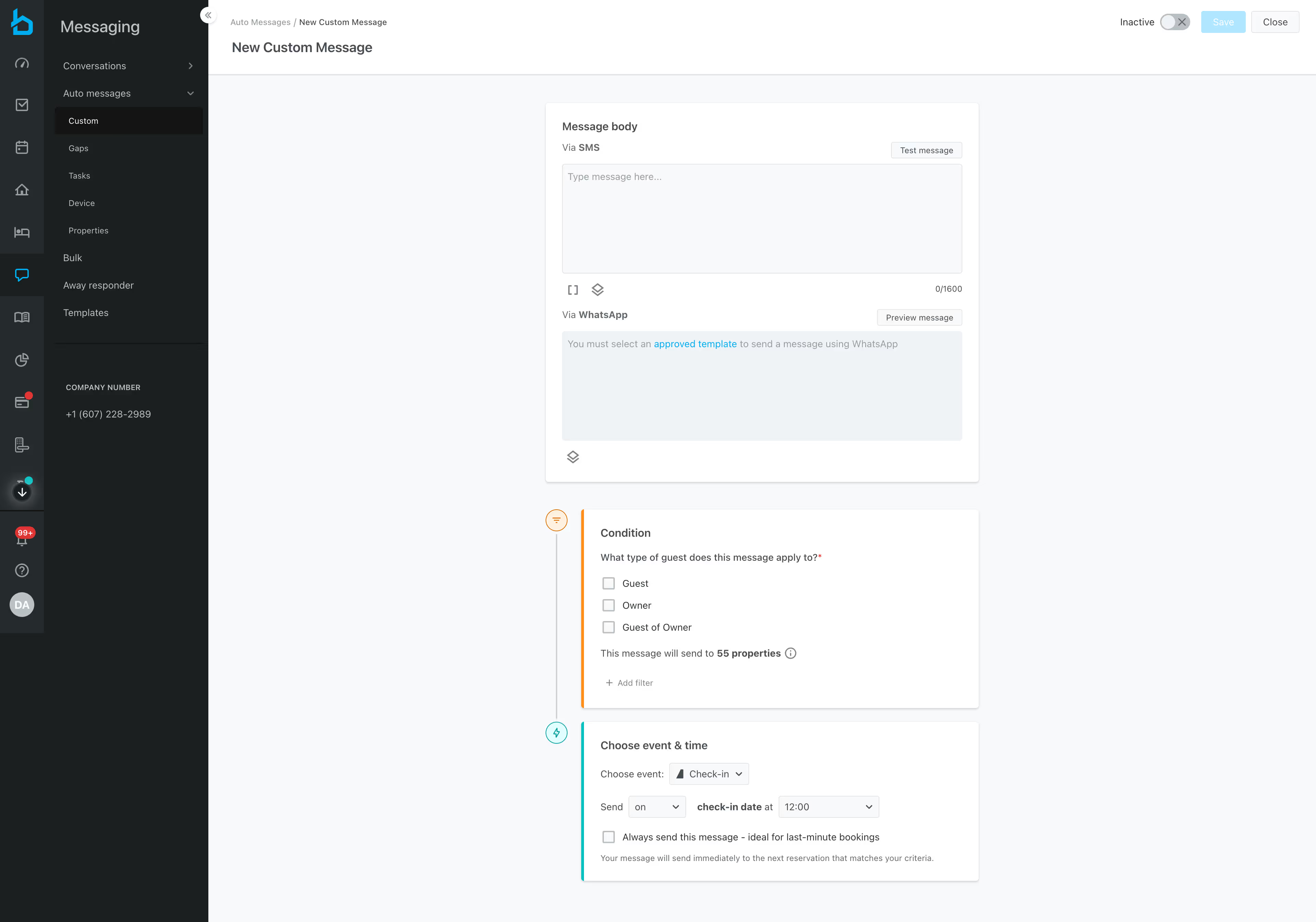

Custom Auto Messages

Same pattern. Different content. One component powering two automation features.

Caption: The Messaging team's Custom Auto Messages using the Condition and Action node components after they were added to the design system.